Generative AI Risks in IT and Cybersecurity for Financial Organizations

Generative AI tools have moved to the forefront of digital innovation over the last couple of years. These tools offer unprecedented capabilities in content creation and manipulation, but they also expose financial organizations to increased risk. This darker side of artificial intelligence must be addressed.

Hollywood’s robot apocalypse remains fiction, but there are real concerns about job displacement, cybersecurity vulnerabilities, market disruptions, and document forgery. These concerns are largely fueled by advances in computing power, intelligent unsupervised algorithms, and the widespread use of generative AI applications such as chatbots.

In this blog post, we examine the risks that generative AI poses to IT and cybersecurity in financial institutions.

Understanding Generative AI

Generative AI refers to artificial intelligence systems capable of generating content, such as images, text, or even entire datasets, with human-like characteristics. Unlike rule-based systems, which rely on explicit programming, generative models learn patterns from vast datasets and use them to create new content that is often indistinguishable from human-made material.

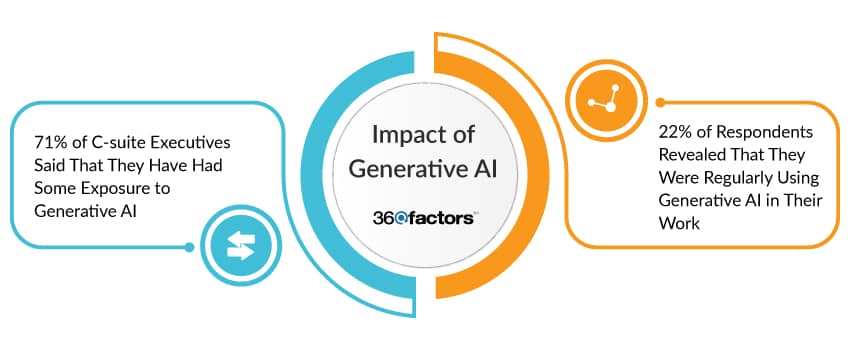

In a global survey of C-Suite executives in April 2023, McKinsey reported that 79 percent of respondents had at least some exposure to generative AI, either at work or outside of work, and 22 percent were regularly using it in their own work.

While this technology opens new frontiers in creativity and efficiency, it simultaneously introduces novel challenges, especially in the context of cybersecurity for financial organizations.

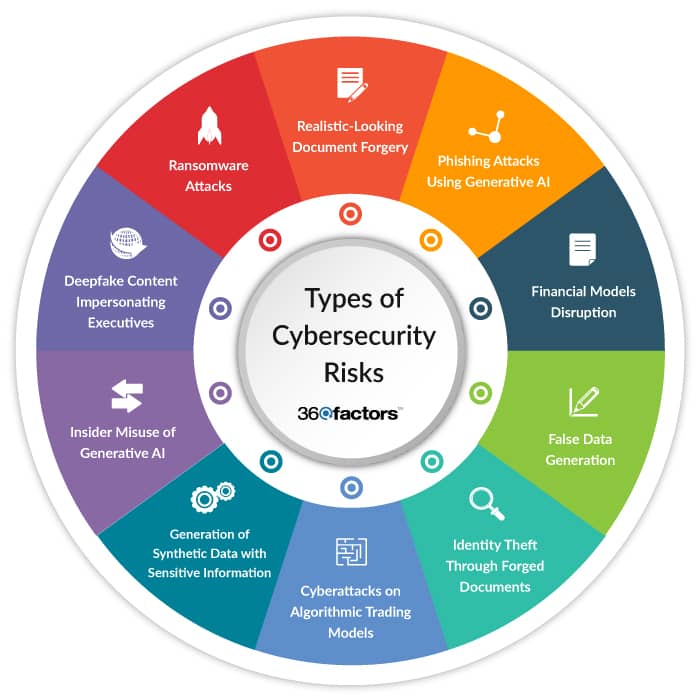

Types of Cybersecurity Risks

Let us consider some of the significant types of cybersecurity risks that are likely to impact financial organizations in the coming years.

Generation of Realistic-Looking Financial Documents or Transactions

Generative AI’s ability to mimic human-like patterns raises concerns about the creation of realistic financial documents or transactions for fraudulent purposes. This risk extends beyond traditional counterfeiting methods, as generative AI can produce documents that closely resemble legitimate financial records.

This not only poses a challenge for fraud detection systems but also increases the likelihood of unauthorized transactions going undetected. Financial organizations will need to enhance their document verification processes and employ advanced anomaly detection mechanisms to counter this evolving threat.
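As a rough illustration of what a basic anomaly detection check might look like, the sketch below flags transaction amounts that sit far from an account's typical range using a modified z-score based on the median and median absolute deviation. The function name, the sample amounts, and the 3.5 threshold are illustrative assumptions, not a recommended production configuration; real fraud systems combine many more signals than amount alone.

```python
from statistics import median

def flag_outliers(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds threshold.

    The median and median absolute deviation (MAD) are used because they are
    less distorted by the outliers themselves than the mean and standard
    deviation would be.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # More than half the values equal the median; flag anything that differs.
        return [i for i, a in enumerate(amounts) if a != med]
    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return [i for i, a in enumerate(amounts)
            if 0.6745 * abs(a - med) / mad > threshold]

# Hypothetical transaction history for one account.
history = [120.0, 95.5, 130.25, 110.0, 101.75, 9800.0, 115.0]
print(flag_outliers(history))
```

Here only the 9800.0 entry is flagged. A check like this would typically be one input to a review queue rather than an automatic block.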

Phishing Attacks Using Generative AI

Phishing attacks have also evolved with the advent of generative AI, enabling cybercriminals to craft highly convincing emails or websites that are nearly indistinguishable from those of legitimate financial institutions. The sophistication of these attacks increases the probability of users falling victim to phishing schemes, compromising sensitive information such as login credentials and financial details.

Financial organizations must implement robust email filtering solutions, conduct regular employee training on phishing awareness, and employ advanced website authentication mechanisms to counter this risk effectively.
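One small piece of an email filtering layer could be a check for sender domains that closely imitate the institution's own. The sketch below uses Python's standard `difflib.SequenceMatcher` for the comparison. The allowlist entries, the function name `lookalike_of`, and the 0.85 similarity cutoff are hypothetical and would need tuning against real mail traffic.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist; a real deployment would load the institution's own domains.
TRUSTED_DOMAINS = {"examplebank.com", "examplebank.co.uk"}

def lookalike_of(sender_domain, trusted=TRUSTED_DOMAINS, min_ratio=0.85):
    """Return the trusted domain that sender_domain appears to imitate, or None."""
    domain = sender_domain.lower().strip(".")
    if not trusted or domain in trusted:
        return None  # exact matches are legitimate, not lookalikes
    # Score the sender against every trusted domain and keep the closest one.
    scored = [(SequenceMatcher(None, domain, good).ratio(), good) for good in trusted]
    ratio, best = max(scored)
    return best if ratio >= min_ratio else None

print(lookalike_of("examp1ebank.com"))   # digit "1" substituted for the letter "l"
print(lookalike_of("examplebank.com"))   # genuine domain
```

String similarity alone will miss some tricks, such as visually similar Unicode characters, so a check like this complements rather than replaces sender authentication and user training.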

Financial Models Disruption

Generative AI poses a unique risk through adversarial attacks aimed at manipulating financial models. These attacks involve subtle alterations to input data, tricking AI predictive models into generating inaccurate predictions or decisions.

In the context of financial organizations relying on algorithmic decision-making, such manipulations could lead to significant financial losses. Mitigating this risk requires constant monitoring of model behavior, implementing anomaly detection algorithms, and reinforcing model security protocols to withstand adversarial attacks.
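One way to approach the monitoring step is to compare the distribution of a model input in recent traffic against a trusted baseline. The sketch below computes the population stability index (PSI), a drift measure often used in credit risk modelling. The bin count, the floor value for empty bins, and the sample data are assumptions for illustration. A drift check like this will not catch a single carefully crafted input, so it is only one part of a defence against adversarial manipulation.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

baseline = list(range(100))             # hypothetical historical feature values
shifted = [x + 50 for x in baseline]    # hypothetical recent values, shifted upward
print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, though the right threshold depends on the model and the feature.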

False Data Generation

The capability of generative AI to create synthetic data raises the risk of false data generation, potentially leading to the manipulation of financial records or reports. Cybercriminals can exploit this capability to deceive auditing processes, regulatory authorities, and internal stakeholders.

Financial organizations need to implement stricter data integrity verification mechanisms, conduct regular audits of generated data, and establish stringent controls over the usage and validation of synthetic data within their systems.
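A minimal sketch of one data integrity mechanism is shown below: each record receives a keyed HMAC-SHA256 tag when it is written, and the tag is rechecked before the record is used in a report or audit. The record fields, function names, and demo key are hypothetical. In practice the key would be held in a managed secret store, separate from the data it protects.

```python
import hashlib
import hmac
import json

def sign_record(record, key):
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of record."""
    # sort_keys and fixed separators make the encoding independent of dict order.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify_record(record, tag, key):
    """True if the record still matches the tag issued when it was written."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sign_record(record, key), tag)

key = b"demo-key-do-not-use"  # placeholder key for illustration only
record = {"account": "ACC-001", "balance": "1520.00"}
tag = sign_record(record, key)

tampered = dict(record, balance="9520.00")
print(verify_record(record, tag, key))    # unchanged record
print(verify_record(tampered, tag, key))  # altered balance
```

A tag like this shows that a record has not changed since it was signed. It says nothing about whether the record was accurate when first written, which is why the audits and validation controls still matter.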

Identity Theft Through Forged Documents

Generative AI’s proficiency in forging documents also raises the risk of identity theft. Attackers can use this technology to produce highly convincing identity documents in a real person’s name and use them to gain unauthorized access to sensitive financial information.

Financial organizations must bolster their identity verification processes, incorporating advanced biometric authentication methods and document validation techniques to ensure the authenticity of submitted documents and prevent identity theft.

Cyberattacks on Algorithmic Trading Models

Algorithmic trading models, prevalent in financial organizations, are exposed to attacks on any generative AI systems they depend on. An attacker who manipulates the generative models used in trading algorithms could influence market behavior and cause financial losses.

Safeguarding against this risk involves implementing robust security measures for algorithmic trading systems, continuously monitoring for market anomalies, and employing adaptive algorithms that can detect and respond to adversarial activity in real time.

Generation of Synthetic Data with Sensitive Information

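Generative AI’s creation of synthetic data also raises privacy concerns, especially given the large volumes of information collected by online apps and social media platforms. A model trained on real personal data can reproduce sensitive details in the supposedly synthetic records it generates.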

Financial organizations must establish stringent controls over the generation and usage of synthetic data, ensuring compliance with data protection regulations such as GDPR and implementing encryption and access controls to safeguard sensitive information from unauthorized access.
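One possible control over generated data is to scan it for values that look like real identifiers before it leaves a restricted environment. The sketch below searches text for digit sequences that pass the Luhn checksum used by payment card numbers. The pattern, function names, and sample strings are illustrative assumptions, and card numbers are only one of many sensitive data types a real scanner would need to cover.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")

def luhn_valid(digits):
    """Luhn checksum: double every second digit from the right and sum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def find_card_numbers(text):
    """Return digit strings in text that look like valid card numbers."""
    hits = []
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits

# 4111 1111 1111 1111 is a widely used test number that passes the Luhn check.
sample = "Synthetic customer note: card 4111 1111 1111 1111 on file."
print(find_card_numbers(sample))
```

A match does not prove the number belongs to a real customer, only that the generated record deserves review before it is shared.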

Insider Misuse of Generative AI

Insiders within financial organizations may misuse generative AI to create fraudulent documents for personal gain or to exploit internal control weaknesses. This risk underscores the importance of implementing robust insider threat detection mechanisms, conducting regular audits of user activities, and fostering a culture of cybersecurity awareness to prevent and detect potential misuse of generative AI technology.

Deepfake Content Impersonating Executives

Generative AI’s ability to craft deepfake content poses a significant risk in the financial sector, particularly through fraudulent audio, video, or written communications that impersonate business executives. Such impersonation could lead to misinformation, financial fraud, or reputational damage.

Financial organizations need to invest in deepfake detection technologies, establish secure communication channels, and conduct regular training programs to educate employees on identifying and reporting potential deepfake content.

Ransomware Attacks

The capabilities of generative AI could be harnessed to craft highly targeted ransomware attacks. Financial organizations, being lucrative targets, must reinforce their cybersecurity systems by implementing robust ransomware protection mechanisms.

Organizations should also consider conducting regular data backups and enhancing employee training to recognize and thwart potential ransomware threats effectively.

Conclusion

As financial organizations continue to operate in the intricate landscape of generative AI, a proactive approach to cybersecurity can help them stay ahead of emerging threats. The risks outlined above underscore the need for robust monitoring and assessment frameworks.

Predict360 IT Risk Assessment has emerged as a comprehensive solution that offers enhanced capabilities for monitoring and assessing IT risks arising from generative AI. By leveraging advanced analytics, real-time monitoring, and customizable reporting, Predict360 IT Risk Assessment empowers financial organizations to stay ahead of evolving cyber threats and fortify their cybersecurity defenses.

In an era where AI innovation and risk coexist, Predict360 IT Risk Assessment Software can be a strategic ally, providing financial institutions with the tools they need to secure their digital future. As the financial landscape continues to evolve, embracing cutting-edge solutions becomes not just a choice but a necessity for ensuring the resilience and security of financial organizations in the face of generative AI risks.
